As I said in my first post on this blog, I want to try to add value to the world of Scientology activism by building a site for deep analysis, starting with my own work and, over time, growing a community of like-minded folks who work together.

Why? Because analysis is the prelude to action. If you can do the following:

- Understand the problem better

- Identify a wider range of possible solutions

- Accurately predict the likely results of each solution scenario, in particular by minimizing the chance that you’ll miss something that leads to unintended consequences,

- Apply enough analytical rigor to choose the best solution, and

- Do that as quickly as possible

…you can significantly improve the outcome of whatever you’re doing, whether that’s winning a war, beating your competition in business, picking stocks, or doing any one of a hundred different things in a dangerous, competitive world.

What Is Analysis?

Most people don’t understand what analysis is and where it fits in the different ways to think about Scientology. In this post, I’ll try to position analysis against the other types of writing that people have done about the cult. I will also try to show how deep analysis can help us work together more effectively as we try to oppose the evils of Scientology.

Analysis is the prelude to action. It’s not a pointy-headed intellectual exercise. If you can gain some unique insight into the problem you’re solving, you can identify a broader range of possible solutions and reach your goal faster and more efficiently than your competitors. Long-term competitive advantage comes from doing this a little better than other people, over and over, for years.

Fundamentally, analysis starts from facts, which experience, logic and judgment then refine into a testable series of predictions about the future. These predictions, unlike predictions from theories of physics or other sciences, are not necessarily exact. But they should be testable, if only so that the analyst has some skin in the game and is accountable for the quality of their work. The end product is an opinion, but it is a highly credible opinion. And it is an opinion that still has value even if the predicted scenario doesn’t come to pass.

A great example of the payoff from good analysis comes from software development. In the classic “waterfall model” of software project management, projects move through five stages: analysis, design, implementation, testing and post-release maintenance. A well-known rule of thumb says that a problem costs 10x as much to fix in a given stage as it would have in the prior stage. It follows that an error in analyzing the problem and potential solutions is roughly 100x more expensive to fix if it’s discovered during implementation, two stages later. So in that domain, the economics of good analysis are compelling.
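The 10x-per-stage rule compounds, which is where the 100x figure comes from. As a rough sketch that treats the rule of thumb as exact (it is only a heuristic), let $C_0$ be the hypothetical cost of fixing an analysis error while still in the analysis stage, and let $k$ count the stages the error survives:

```latex
\begin{aligned}
C_k &= 10^{k}\,C_0, \qquad k = 0\ (\text{analysis}),\ 1\ (\text{design}),\ 2\ (\text{implementation}),\ 3\ (\text{testing}) \\
C_2 &= 10^{2}\,C_0 = 100\,C_0
\end{aligned}
```

By the same rule, an analysis error that slips all the way to testing would cost about $10^{3}\,C_0$, or 1,000 times as much, to fix.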

The Elements of Good Analysis

Among the key elements of good analysis are:

Testable conclusions: The end product must include clear, unambiguous predictions of some future event, stated so that the conclusion can be verified. You have to be able to determine whether you were right or wrong.

A statement that “Microsoft will have a good quarter” isn’t terribly useful, nor is it testable. On the other hand, consider this statement:

“We now believe Microsoft will report earnings per share of $0.03 to $0.05 above the current consensus earnings estimate. We expect upside to be driven by Internet search revenue coming in approximately $100 million above our former estimate, but more importantly, by lowered capital expenditures in that unit, coming from tighter expense controls. If we are correct, we expect the price/earnings multiple on the stock to expand slightly as investors are relieved that the Company is becoming more consistent in applying expense controls, and we would expect the stock to rise from the current $37.66 to around $39.50 in the short term after the earnings release.”

That’s a highly testable scenario. It makes five specific predictions, and each one can be examined to determine whether it came to pass. It also hints at what failure would look like: if the thesis is wrong, the stock won’t rise by the roughly 5% we are expecting here.
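To make “testable” concrete, here is a minimal Python sketch that encodes the numeric calls from the Microsoft example as ranges and scores them against outcomes. The `Prediction` class and `score` function are hypothetical names chosen for illustration. The EPS range comes straight from the quote, but the revenue and price bands are assumptions, since the quote only says “approximately” and “around”. The observed values are invented purely to show the mechanics. The two qualitative calls (lower capital spending, P/E expansion) are left out because they need a yes/no judgment rather than a numeric range.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    """One quantitative call, stated as a closed range so it can be scored."""
    description: str
    low: float
    high: float

    def check(self, actual: float) -> bool:
        # The call counts as right only if the observed value lands inside the range.
        return self.low <= actual <= self.high


def score(predictions, outcomes):
    """Map each prediction's description to True (right) or False (wrong)."""
    return {p.description: p.check(outcomes[p.description]) for p in predictions}


# Numeric calls from the Microsoft example. The EPS range is from the text;
# the revenue and price bands are ASSUMED, because the text gives no exact bounds.
calls = [
    Prediction("EPS beat vs. consensus ($)", 0.03, 0.05),
    Prediction("Search revenue beat ($M)", 75.0, 125.0),
    Prediction("Post-earnings price ($)", 39.00, 40.00),
]

# Size of the predicted move: 39.50 / 37.66 - 1, a little under 5%.
expected_move = 39.50 / 37.66 - 1
print(f"Expected move: {expected_move:.1%}")  # Expected move: 4.9%

# HYPOTHETICAL outcomes, invented only to show how the scoring works.
observed = {
    "EPS beat vs. consensus ($)": 0.04,
    "Search revenue beat ($M)": 60.0,
    "Post-earnings price ($)": 38.10,
}
print(score(calls, observed))
```

With these made-up outcomes, the EPS call scores as right while the revenue and price calls score as wrong, so the mixed result is visible at a glance instead of being argued after the fact.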

Arguments and data that add value even if the conclusion is wrong: Analysis is not like betting. You have to accept that even your best work won’t get the conclusion right all the time. And you also have to accept that you might get the conclusion right but your scenario showing how you got to the conclusion will be off, or vice versa. It’s like a complicated problem in algebra: you get marked off a little if you get the answer wrong, but you lose all the points if you get the answer wrong and you don’t show your work.

I have been recognized by peers on many occasions for doing great work even if I’ve gotten the conclusion wrong, because my thought process provided a new way to look at the operations of a given company, or because I was able to discover some data about the company’s operations that no one else had.

One of the key things about formulating a good argument is intellectual honesty. It usually ends badly if you build an argument designed to reach a particular conclusion while intentionally omitting facts that undermine your case. In my business, anyone who hides known facts to take a shortcut to making their case is certain to get caught by some sharp-eyed client who will then dismember them. It’s like a district attorney prosecuting a case: he can’t hide evidence from the defense that could help show the defendant is innocent. In other words, he has to prove his case while disclosing the evidence that could help the opposition prove theirs.

It’s similar here. Intellectual honesty demands that we acknowledge what Scientology has done right as well as attacking what it does wrong. After all, the cult survived the imprisonment of almost a dozen members of senior management following Operation Snow White in 1977. It survived several epic legal setbacks in the 1980s and 1990s, and it survived the Mission Holder Purge, among other changes, when David Miscavige took over. It had to have been doing something right to stay alive through events that would have mortally wounded other organizations. Thus, to be credible, the “cult collapse scenario” (first version yet to be written as of publication date) would have to acknowledge and explain why those events were not fatal before it could establish that the current scenario may actually result in the cult shutting its doors forever.

The right amount of rigor for the available time frame: Analysis is sort of like science, with one difference: in science, predictions have to be right all of the time. Kepler’s laws of planetary motion work 100% of the time. That’s why they are laws, and the process of deriving them is science.

Analysis tries to apply science-like rigor to much squishier problems than the ones physicists tackle. But every problem is different, and part of a good analyst’s job is figuring out how much work to put into a solution. A highly precise answer delivered a day late is useless on Wall Street, though lateness may matter much less in other kinds of analysis, such as legal research or drug trials, where accuracy and safety are paramount and the time needed to finish is secondary.

So part of the process is figuring out how much rigor you need. Sometimes back-of-the-envelope estimates are all you have time for, but even those have some rigor behind them; I’ll cover back-of-the-envelope exercises (which are a lot of fun) in one of my first articles on analytical technique. Other times, you need a detailed analysis that covers a substantial portion of the sample space, and the rigor required approaches that of science.

A commitment to improving the process: Because analysis is a human process, it can always be improved. After every failure, and after every success, it’s important to debrief and spend at least a little time figuring out what worked and what didn’t. I try to do this with the analysts on my team, particularly after a notable success or failure. This is one of the key lessons of the aerospace industry: after every crash, government investigators work to determine the cause and then to ensure that no future crash happens because of that particular problem. I’ve written tons of mea culpa research reports to clients after blowing a call in a particularly egregious way. Nobody expects me to be perfect; they just expect me to be diligent, and one of the ways you show diligence is accurate self-analysis when you go wrong.

An ability to admit you’re wrong: A big part of being a good analyst is getting your ego out of the way. If you understand that the goal is to do good work, producing useful conclusions backed by well-supported arguments, then you’re doing a good job. Staying humble in the face of a difficult task helps. Admitting you’re wrong and taking clearly visible steps to get it right next time is key. So is having compassion for others who get it wrong after doing a good job. That’s why resisting the easy temptation of ad hominem attacks is really important if we’re going to do serious work here. I have successfully managed people I dislike intensely on a personal level, but if they do the work thoroughly and honestly, and if they admit when they’re wrong, I will treat them professionally and respectfully and I will pay them well, even if I am invariably ready to smack them when they come into my office to complain about something.

There’s an unspoken agreement that’s necessary for survival in stock picking: criticize my work, don’t criticize me. As long as I’m diligent and useful, you do not have the right to engage in an ad hominem attack. In fact, in my world, leading with an ad hominem attack is seen as a virtual guarantee that the attacker has no game and is making a very poor attempt to cover it up.

Analysis Versus News

News and analysis are highly complementary, but they are not the same. We in Global Capitalism HQ depend on accurate news reporting about companies and about external events (Fed actions, etc.) as a cornerstone of investment decisions. We spend a certain amount of time thinking about whether a given news story is credible, and we have opinions about certain reporters in our respective areas of expertise. I loved certain Wall Street Journal reporters and trusted them implicitly, but others I wouldn’t trust as far as I could throw them, because there were areas where they were completely unable to understand the real issues they were covering.

News is about answering the classic questions about an event: who, what, where, when and why? It’s about delivering the most relevant facts about the past. A news reporter’s job is to focus on the past, and not to get distracted by the future. Typically, of course, a good news reporter will interview experts who do have an opinion about the future and will quote them extensively in looking at the significance of the event. But it is not the news reporter’s job to express his own opinion about the future, even though a good reporter is close enough to the story that he will undoubtedly have an opinion.

Tony Ortega is a good journalist because he goes to appropriate lengths to keep his personal opinions out of the story. Sure, Tony has opinions about the issues he covers; that’s how he is able to figure out when a particular source is lying or telling the truth. In journalism, the writer is not allowed to say what he thinks, though he may slant the story by focusing more on sources on one side of the issue versus another. The degree to which reporters do this honestly factors into their long-term credibility, of course.

Determining whether a news reporter got a story wrong is fairly straightforward: did the reporter ignore or omit key facts that a competent reporter would have found?

Getting a news story wrong is a big problem, but in analysis, as long as you put in the work and do your best, being wrong is disappointing but not fatal. In that respect, analysis is like baseball. In the 2013 season, Detroit’s Miguel Cabrera led the league with a .341 batting average. That meant he failed to get a hit in roughly two out of three at-bats, yet he was better than anybody else that season. Football, by contrast, is more like news reporting: getting a story wrong hurts your career in much the same way that missing a field goal from the five-yard line would hurt an NFL kicker. You’re just not supposed to miss those if you’re a pro, even if it’s 15 below and snowing like mad in Green Bay.

Analysis Versus Opinion

All analysis is opinion, but relatively few opinions count as analysis.

Everyone has opinions. Most people tend to think their opinions are inherently damned smart ones; otherwise they would hold different ones. In other words, their egos are tied up with their opinions. That’s fine when you’re thinking about your favorite basketball team or which supermodel is the most beautiful. Those are hard arguments to win with facts. But it’s really dangerous to treat opinions as true when you’re trying to make your opinions bring about something big in the world.

You’ll notice that I work very hard on Tony’s site to qualify statements I make as verifiable fact, as analytical opinions with a high confidence level (often indicated by reference to a document, or with terms indicating my confidence level), as a hypothesis, as an educated guess, as a supposition, or as merely a speculation (almost a fantasy).

For example, in a comment on Tony’s blog today, I observed that if Tommy Davis really was in NYC as part of his job traveling as an assistant to the chairman of his real estate investment fund, it seemed likely that he’d be spending a lot of his time in LA; but if his wife is still living in Texas, is there a possibility that they’re divorced? I specifically said that this was a potential inconsistency in the facts that bears further checking, that it was not intended to start a rumor that they’re divorced, and that it certainly wasn’t fact. I clearly stated my level of confidence in what I wrote, which was zero, since it was really a question. That’s really important in analytical work. The CIA and other intelligence agencies have very specific standards for the wording they use to express their confidence levels in what they write. We on Wall Street are a bit less formal, but it is still important.

Analysis Versus Lulz

All of what I’ve written above has been deadly serious. But that’s not to imply that analysis has to be serious, let alone boring. If you can be funny in a research report, it sure helps make your conclusions jump out at the reader, as long as the humor is built on solid logic.

And sometimes, the lulz that the Anons post can point the way to deeper truths about Scientology. The finely honed sense of the absurd that keeps many Scientology protesters engaged can give real insight into the cult, particularly into how its members behave.

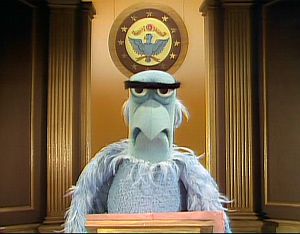

Though a lot of what I’ve written so far on this blog has been serious, I’m not like Sam the Eagle from the Muppets, utterly humorless and perennially solemn. In fact, over my career, I’ve been admonished to dial down the humor far more often than most people who do my job. Here, I’d like to encourage wit and humor to be part of what we end up doing, though not at the cost of good thinking and good data.

Outside References

There’s not much written as an introduction to Wall Street analysis, since the field changes fairly rapidly and most people just learn on the job. But it’s fun to read about military analysis and try to understand the general thought process, even if the mechanics of producing military and political intelligence are way too cumbersome for what we’ll try to do.

- The Wikipedia page on Intelligence Analysis gives a pretty good overview of the research process in a discipline that is more rigorous and slower-moving than what we’re trying to do. It’s a good place to start to indulge your inner James Bond as you think about how to add rigor to what we do. I’d suggest reading it and looking at some of the referenced articles, such as the NATO Open Source Intelligence primer below.

- The NATO Open Source Intelligence primer gives some understanding of the process of “open source” intelligence (not the same as open source software), which uses publicly available information such as newspapers and broadcast media to figure out what a potential adversary’s intent is. It’s all about looking for patterns in disparate sources and having a good process. Unfortunately, this document is written in Eurocrat-speak, which is even worse than most military bureaucracy, and it’s a bit dated, but still useful.

- A bibliography page at the USAF “Air University” training center has a ton of reading on intelligence and related topics. A lot of the books in the sections titled “Intelligence Basics” and “Intelligence Analysis” are classics in the field. Knock yourself out on some of these. Of these, I’d particularly recommend:

  - Psychology of Intelligence Analysis, by Richards J. Heuer, Jr., published by the CIA in 1999 and released into the public domain.